This project is the result of research conducted during my summer internship at the Kumar Robotics Lab at UPenn.

Typically, point cloud data can be viewed as a set: the order of its constituents does not affect the information it contains.

This means that point clouds are invariant under permutations of their constituents (i.e., the individual points).

This presents challenges in developing effective learning-based methods for them. In particular, standard neural networks expect their inputs in a fixed order, so they cannot be trained directly on unordered sets.
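To make the ordering problem concrete, here is a minimal NumPy sketch. It is only an illustration, not the network from this project: the function name `global_feature`, the random weights, and the max-pooling aggregation are all assumptions chosen for the demo. Shuffling the points changes the flattened input vector that an ordinary fully connected layer would see, while a feature built from a shared per-point map followed by a symmetric aggregation stays the same.

```python
import numpy as np


def global_feature(points, weights):
    """Shared per-point linear map + ReLU, then a max over all points.

    The max is a symmetric function, so the result does not depend on
    the order in which the points are listed.
    """
    per_point = np.maximum(points @ weights, 0.0)  # shape (N, 16)
    return per_point.max(axis=0)                   # shape (16,)


rng = np.random.default_rng(0)
points = rng.normal(size=(128, 3))    # toy point cloud, N = 128
weights = rng.normal(size=(3, 16))    # hypothetical shared weights

# Same set of points, listed in a different order.
shuffled = points[rng.permutation(len(points))]

# What a plain fully connected layer would receive: order matters here.
flat_a = points.reshape(-1)
flat_b = shuffled.reshape(-1)

# Order-independent summary of the set.
feat_a = global_feature(points, weights)
feat_b = global_feature(shuffled, weights)

print("flattened inputs equal:", np.allclose(flat_a, flat_b))
print("pooled features equal: ", np.allclose(feat_a, feat_b))
```

The flattened vectors differ even though both describe the same point cloud, which is why a model that consumes raw point lists needs some order-independent structure.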

In this paper (pending submission to CVPR 2023), we devise novel learning-based frameworks that train directly on unordered data such as point clouds.

Point clouds are generally converted to voxel grids or range images, but such representations are wasteful in both computation and memory.
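A rough way to see the waste is to voxelize a small synthetic cloud and count how many cells are actually occupied. The sketch below is an assumption-laden toy (points sampled on a unit sphere, a hypothetical helper `voxel_occupancy`, and a 64³ grid), not the preprocessing used in any particular pipeline.

```python
import numpy as np


def voxel_occupancy(points, resolution):
    """Return a boolean occupancy grid of shape (resolution,) * 3."""
    mins = points.min(axis=0)
    spans = points.max(axis=0) - mins
    spans[spans == 0] = 1.0  # guard against a degenerate axis
    # Map each point to an integer cell index in [0, resolution - 1].
    idx = np.floor((points - mins) / spans * resolution).astype(int)
    idx = np.clip(idx, 0, resolution - 1)
    grid = np.zeros((resolution,) * 3, dtype=bool)
    grid[idx[:, 0], idx[:, 1], idx[:, 2]] = True
    return grid


rng = np.random.default_rng(0)
n_points, resolution = 2048, 64

# Toy surface-like cloud: random points on the unit sphere.
points = rng.normal(size=(n_points, 3))
points /= np.linalg.norm(points, axis=1, keepdims=True)

grid = voxel_occupancy(points, resolution)
occupied_fraction = grid.sum() / grid.size

print("values stored by the point cloud:", points.size)
print("cells stored by the voxel grid:  ", grid.size)
print("fraction of occupied cells:      ", occupied_fraction)
```

Since each point can occupy at most one cell, at most 2048 of the 262,144 cells can be non-empty here, so well over 99% of the dense grid is empty space that still has to be stored and processed.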

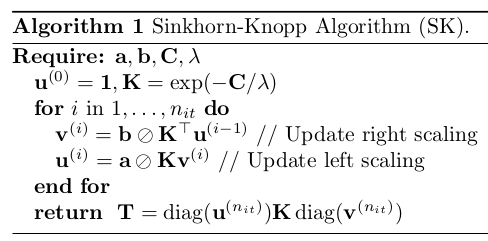

As the loss, we use the Sinkhorn distance (an entropy-regularized approximation of the Wasserstein distance, computed with the Sinkhorn-Knopp algorithm).
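For readers unfamiliar with this loss, the following is a minimal NumPy sketch of a Sinkhorn distance between two point sets with uniform weights. It is not the training code from this project: the squared Euclidean cost, the regularization strength `epsilon`, the iteration count, and the function names are all assumptions made for illustration. The iterations are written in the log domain for numerical stability.

```python
import numpy as np


def _logsumexp(a, axis):
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = a.max(axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.exp(a - m).sum(axis=axis))


def sinkhorn_distance(x, y, epsilon=0.05, n_iters=200):
    """Entropy-regularized transport cost between point sets x and y.

    Returns the cost <plan, cost_matrix> and the transport plan.
    Both sets get uniform weights (an assumption of this sketch).
    """
    n, m = len(x), len(y)
    # Pairwise squared Euclidean distances, shape (n, m).
    cost = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
    log_a = np.full(n, -np.log(n))
    log_b = np.full(m, -np.log(m))
    f = np.zeros(n)  # dual potential for x
    g = np.zeros(m)  # dual potential for y
    for _ in range(n_iters):
        # Alternately rescale so row, then column, marginals match.
        f = -epsilon * _logsumexp((g[None, :] - cost) / epsilon + log_b[None, :], axis=1)
        g = -epsilon * _logsumexp((f[:, None] - cost) / epsilon + log_a[:, None], axis=0)
    log_plan = (f[:, None] + g[None, :] - cost) / epsilon + log_a[:, None] + log_b[None, :]
    plan = np.exp(log_plan)
    return float((plan * cost).sum()), plan


rng = np.random.default_rng(0)
x = rng.uniform(size=(64, 3))
y = rng.uniform(size=(64, 3))

d_xy, plan = sinkhorn_distance(x, y)
# Reordering the points of x should not change the distance.
d_perm, _ = sinkhorn_distance(x[rng.permutation(len(x))], y)

print("distance:          ", d_xy)
print("after shuffling x: ", d_perm)
```

Because the distance is defined through a transport plan between the two sets rather than an index-by-index comparison, it does not depend on how the points are ordered, which is what makes it a natural fit as a loss on point clouds.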

We used the following learning blocks in the proposed neural network:

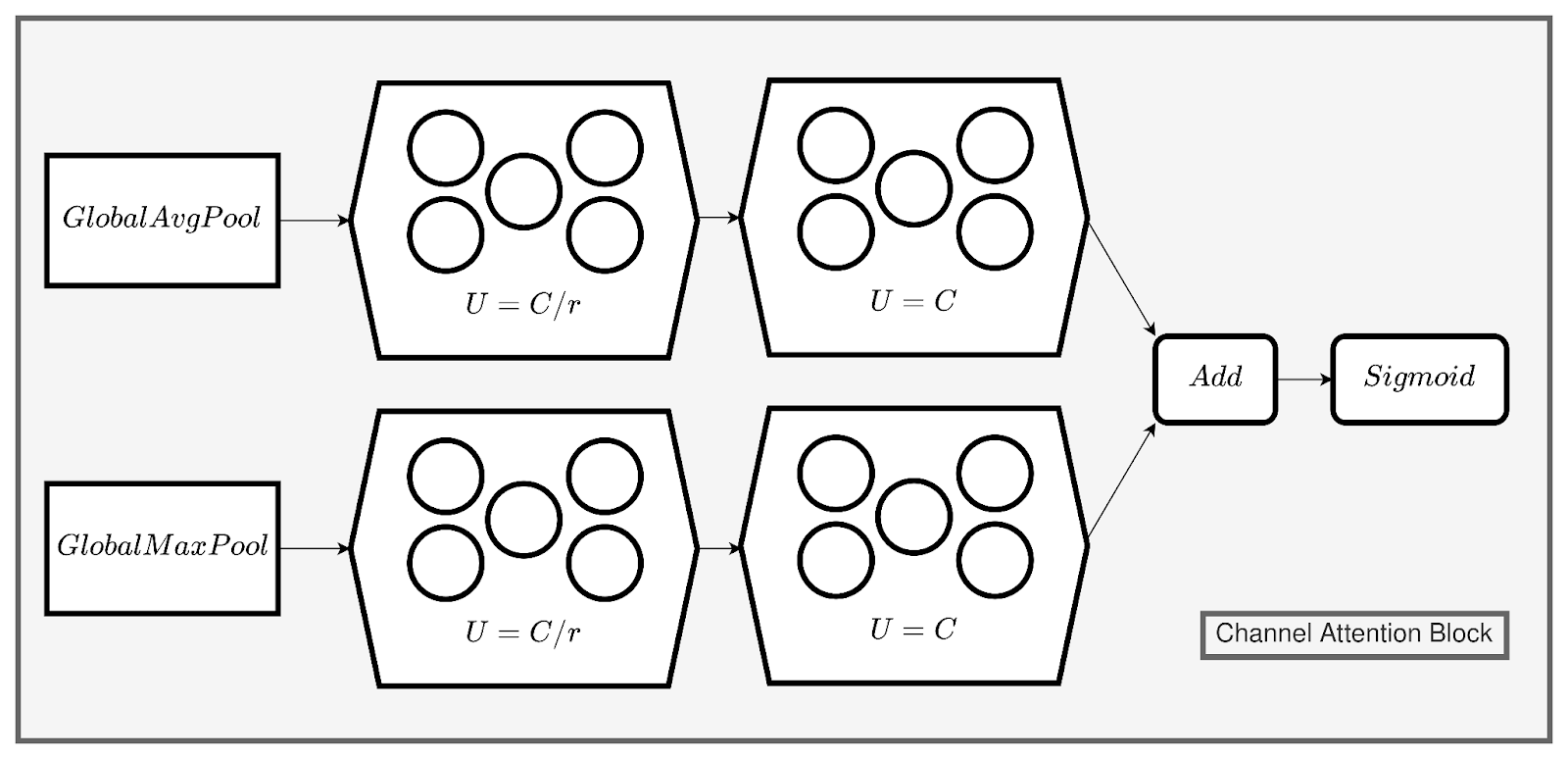

Channel Attention Block

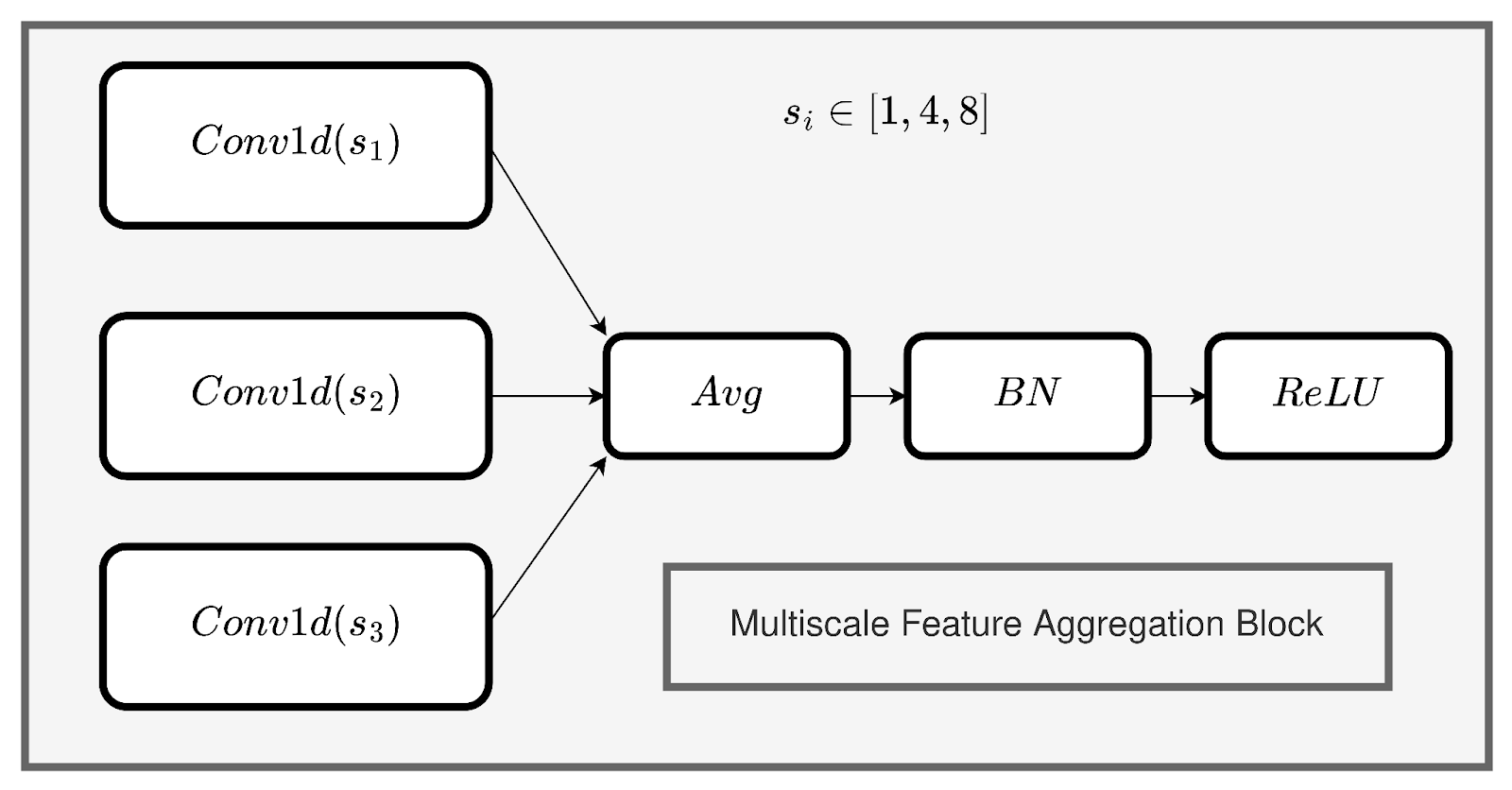

Multiscale Feature Aggregation Block

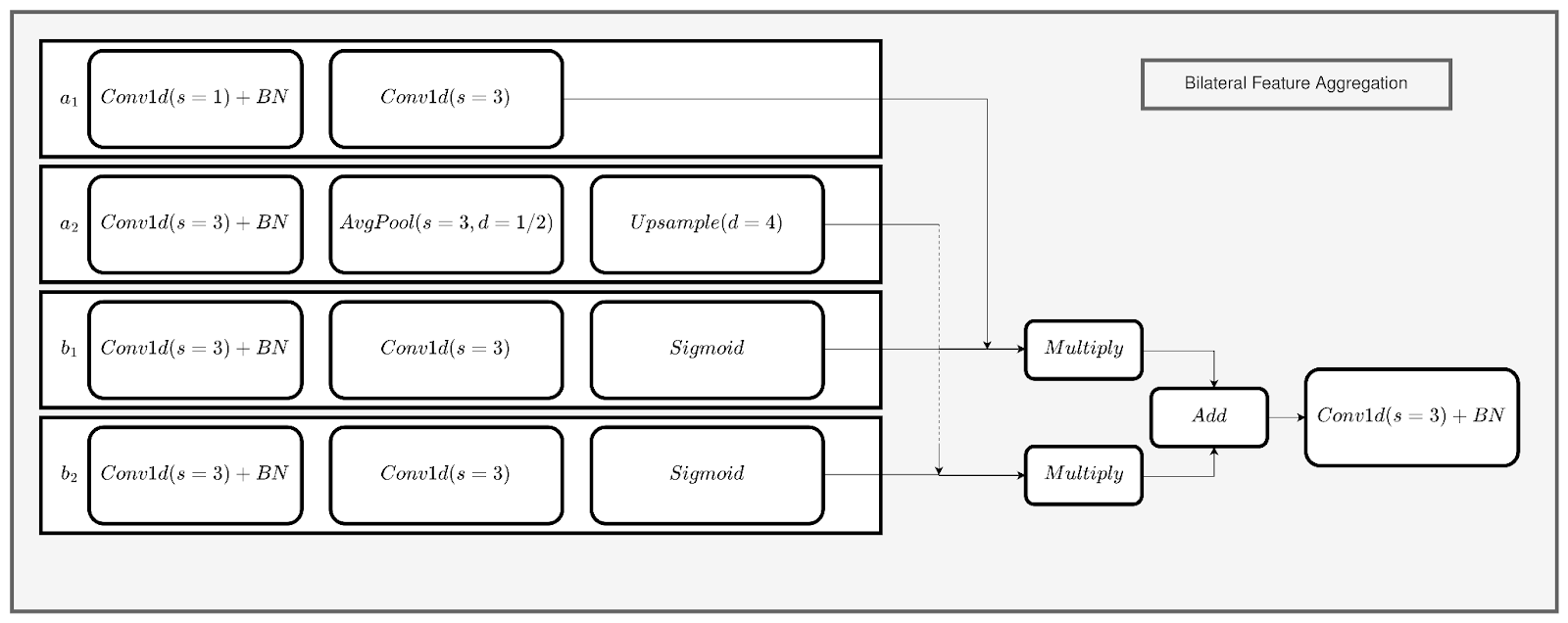

Bilateral Feature Aggregation Block

Attentive Rechecking Block

For readers who want a more rigorous understanding of the problem and the proposed solution, here are the slides I presented at the end of my research internship.

Slides

Experiment (Point Cloud Auto-Encoding):

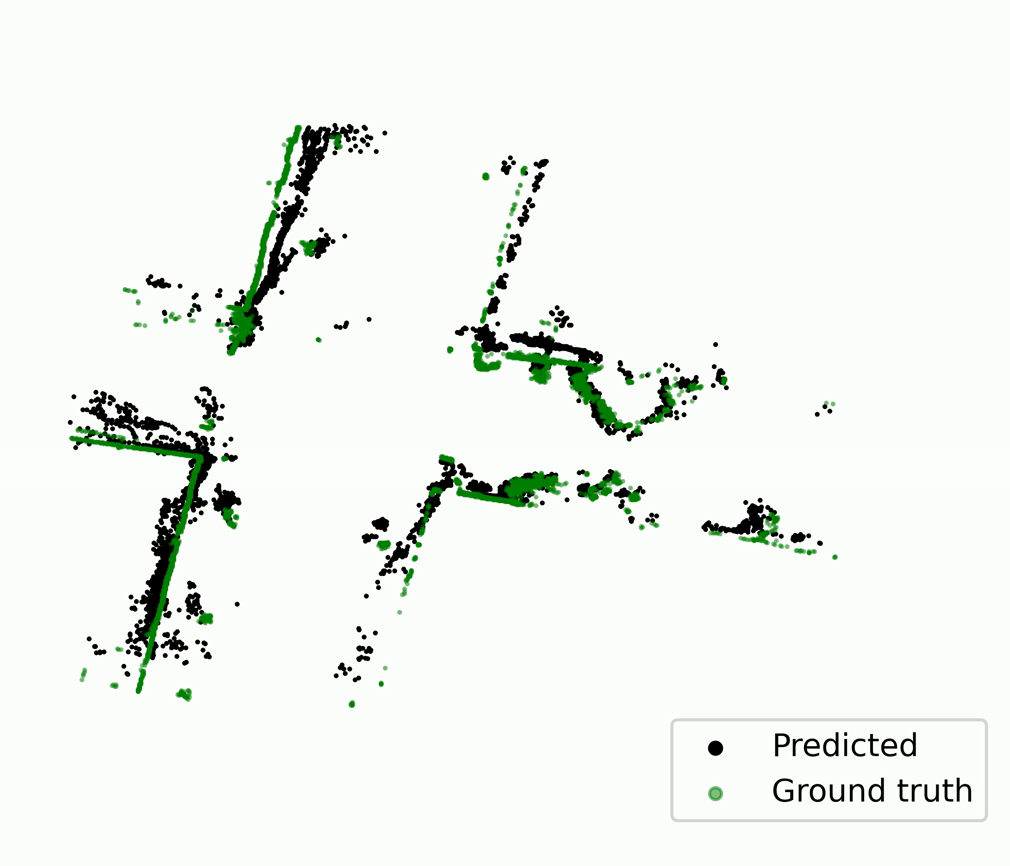

Experiment (2D Occupancy Prediction from projected Point Clouds):

Video Demonstration (2D Occupancy Prediction from projected Point Clouds):

Top: Predictions

Bottom: Ground Truth