This project explores adding detail to the semantic information in images, i.e. generating detailed images from segmentation maps. The technique has many potential applications. These range from robotics (generating bulk synthetic data for training) to industries such as fashion, art, and generative design.

The network used here is a GAN composed of two components. The first is an encoder-decoder generator whose encoder is based on EfficientNet-B4. The second is a PatchGAN-based discriminator.
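A PatchGAN discriminator does not output a single real/fake score for the whole image. It outputs a grid of scores, and each score judges one local patch. The sketch below does the receptive-field and output-size arithmetic. It assumes the standard pix2pix "70×70" layout: 4×4 convolutions with strides 2, 2, 2, 1, 1 and padding 1. The layer list `PATCHGAN_LAYERS` and the helper names are hypothetical, and this project's discriminator may be configured differently.

```python
# Minimal sketch: receptive field and output grid of a PatchGAN discriminator.
# ASSUMPTION: standard pix2pix "70x70" layout (4x4 convs, strides 2,2,2,1,1,
# padding 1). This project's discriminator may use a different configuration.

# Hypothetical (kernel_size, stride, padding) per conv layer, input -> output.
PATCHGAN_LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 1), (4, 1, 1)]


def receptive_field(layers):
    """Side length, in input pixels, of the patch seen by one output unit."""
    rf = 1
    # Walk backwards from a single output unit towards the input image.
    for kernel, stride, _ in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf


def output_size(input_size, layers):
    """Side length of the discriminator's output score map."""
    size = input_size
    for kernel, stride, padding in layers:
        # Standard convolution output-size formula.
        size = (size + 2 * padding - kernel) // stride + 1
    return size


if __name__ == "__main__":
    print(receptive_field(PATCHGAN_LAYERS))   # 70
    print(output_size(256, PATCHGAN_LAYERS))  # 30
```

Under these assumptions, a 256×256 input yields a 30×30 grid of scores, and each score covers a 70×70 patch. Judging realism patch by patch is what makes this kind of discriminator sensitive to local texture and fine detail.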

The encoder extracts important spatial and representational features from the input segmentation map. The decoder then adds detail to the different regions of the image.

One interpretation of this process is that the encoder captures where the surface details of the image should be located, whereas the decoder supplies the context and the surface details themselves.
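The encoder/decoder split described above can be illustrated by tracking spatial resolution through the generator. The sketch below makes several assumptions that this project does not confirm. It uses five encoder stages that each halve the resolution; EfficientNet backbones do reach an overall stride of 32. It uses a decoder that doubles the resolution at each stage and reuses the encoder map of matching size through a U-Net-style skip connection. The input size of 256 and the helper names are purely illustrative.

```python
# Minimal sketch: spatial resolution through an encoder-decoder generator.
# ASSUMPTIONS (not confirmed by this project): five encoder stages that each
# halve the resolution (EfficientNet backbones reach an overall stride of 32),
# and a decoder that doubles the resolution per stage, reusing the encoder map
# of matching size (U-Net-style skip connection) where one exists.

NUM_STAGES = 5


def encoder_resolutions(input_size, num_stages=NUM_STAGES):
    """Side length of the feature map after each encoder stage."""
    if input_size % (2 ** num_stages) != 0:
        raise ValueError("input size must be divisible by 2**num_stages")
    return [input_size // 2 ** (i + 1) for i in range(num_stages)]


def decoder_plan(input_size, num_stages=NUM_STAGES):
    """(output size, skip size or None) for each decoder stage."""
    enc = encoder_resolutions(input_size, num_stages)
    # The deepest encoder map is the decoder's starting point; the remaining
    # maps serve as skips from coarse to fine. The last stage has no skip.
    skips = enc[-2::-1] + [None]
    plan = []
    size = enc[-1]
    for skip in skips:
        size *= 2  # each decoder stage upsamples by a factor of 2
        plan.append((size, skip))
    return plan


if __name__ == "__main__":
    print(encoder_resolutions(256))  # [128, 64, 32, 16, 8]
    print(decoder_plan(256))  # [(16, 16), (32, 32), (64, 64), (128, 128), (256, None)]
```

Skip connections of this kind are one common way to let the decoder reuse the encoder's spatial (localization) information while it synthesizes detail. That is consistent with the interpretation above, but whether this project's decoder uses them is an assumption.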

A similar framework can also be used for style transfer, in this case transferring the design of one object onto another object.

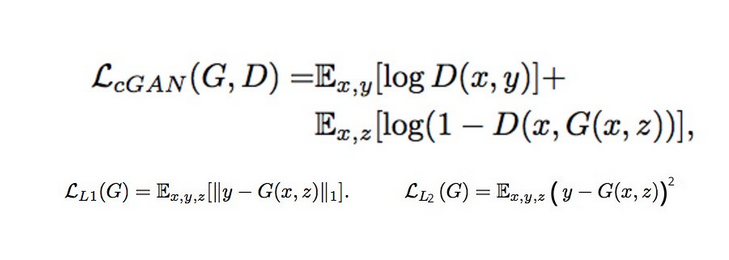

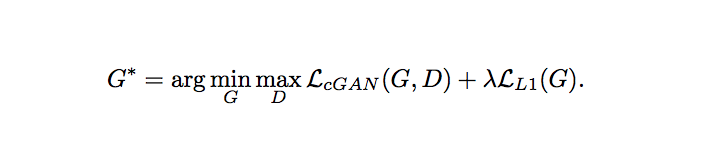

Loss Functions Used: