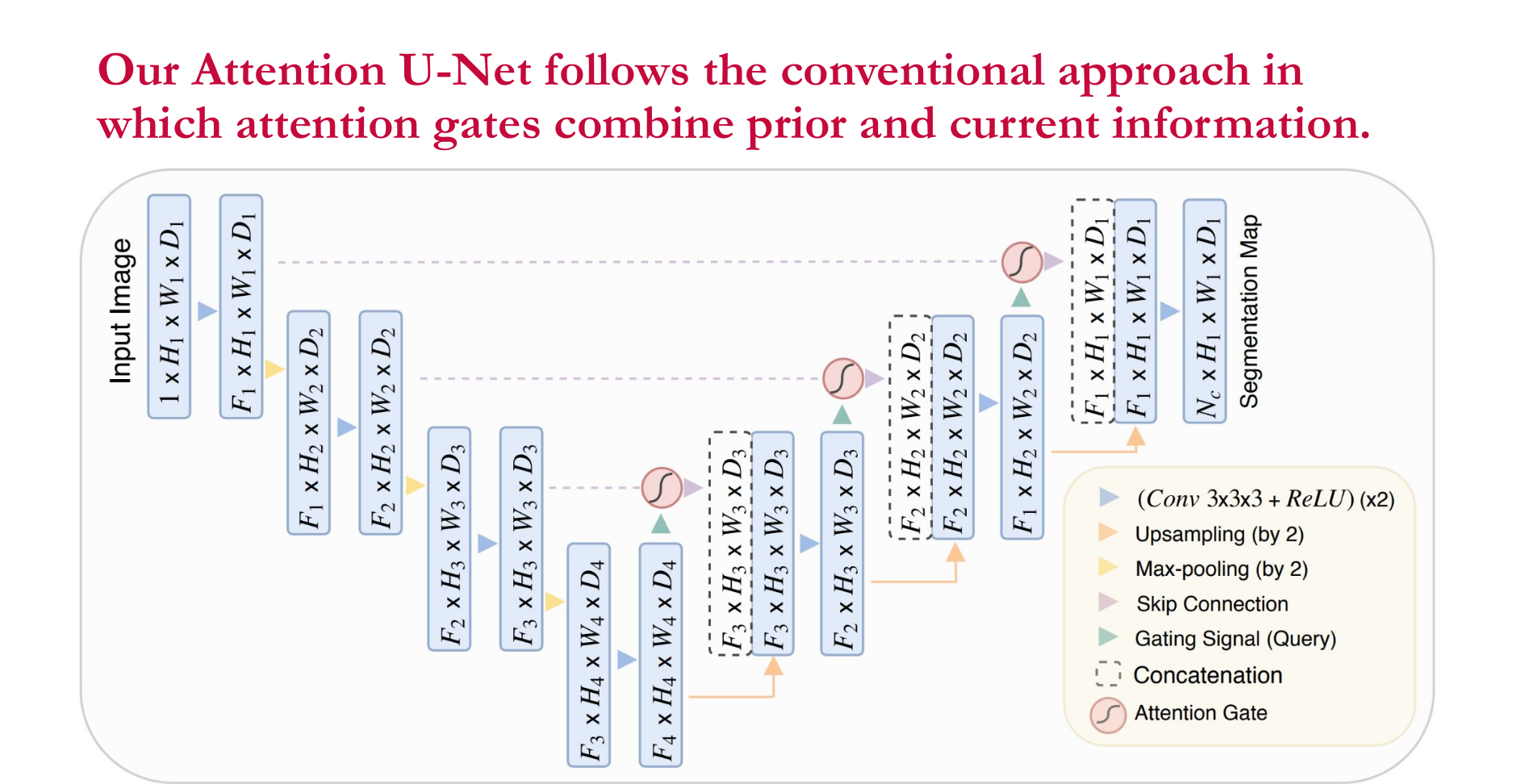

In this project, I explored generating raw point clouds from RGB images (the representation shown is a grayscale image). This problem differs from simpler image generation tasks because the output is mostly sparse. To speed up training, I formulated special loss functions.

There is great potential in generating raw depth data (point clouds) from RGB images. This approach can be extremely efficient, considering that the clouds are mostly sparse representations.

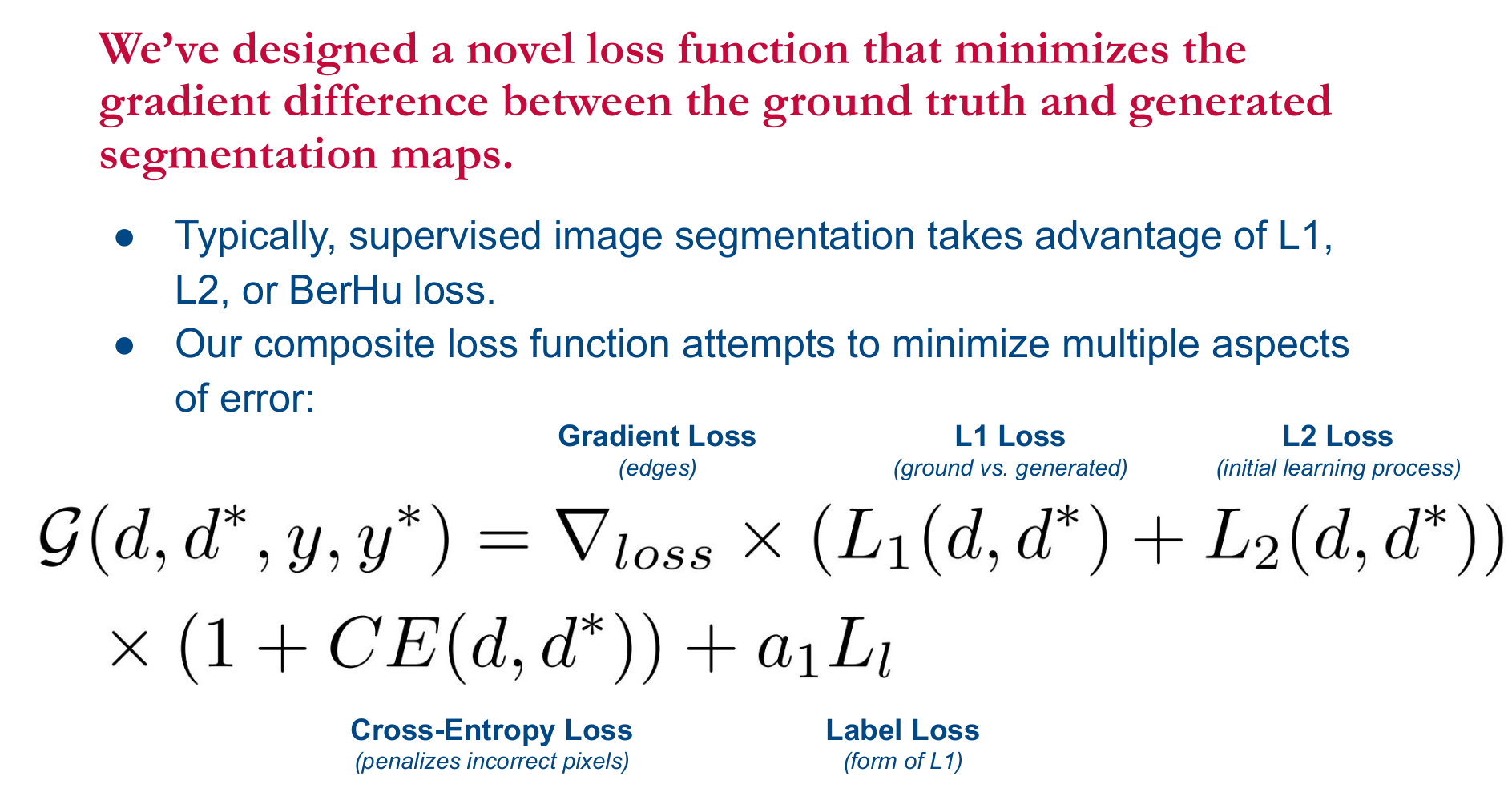

During this project, I found that loss functions like cross entropy performed better than L1 or L2 loss. I also used special loss terms that measure the difference between the predicted number of points and the ground-truth number of points.
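To make the idea of a cross-entropy loss with a point-count term concrete, here is a minimal sketch in plain Python. It is not the code used in the project: the function names, the flattened occupancy-map representation, and the `count_weight` value are all assumptions chosen for illustration, and a real implementation would likely use an array or deep learning library instead of lists.

```python
import math

def binary_cross_entropy(pred, target, eps=1e-7):
    """Mean binary cross entropy over a flattened occupancy map.

    pred holds per-pixel probabilities in [0, 1]; target holds 0/1 labels.
    """
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(pred)

def point_count_penalty(pred, target):
    """Gap between the soft predicted point count and the true point count,
    normalized by the number of pixels (hypothetical formulation)."""
    return abs(sum(pred) - sum(target)) / len(pred)

def combined_loss(pred, target, count_weight=0.5):
    """Cross entropy plus a weighted point-count term (count_weight is assumed)."""
    return binary_cross_entropy(pred, target) + count_weight * point_count_penalty(pred, target)

# A sparse target: only one of eight pixels contains a point.
target = [0, 0, 0, 1, 0, 0, 0, 0]
good = [0.05, 0.05, 0.05, 0.9, 0.05, 0.05, 0.05, 0.05]  # finds the point
empty = [0.05] * 8                                       # predicts nothing

print(combined_loss(good, target), combined_loss(empty, target))
```

In this toy case the prediction that misses the single point receives a clearly higher combined loss than the one that finds it, even though the two predictions differ in only one pixel.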

I am working on another implementation of this idea using a genetic algorithm as the optimizer. This will allow for non-differentiable loss formulations.
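As a rough illustration of why a genetic algorithm permits non-differentiable losses, the sketch below evolves the two parameters of a toy thresholded model against a loss made of discrete counts. Everything here is hypothetical (the model, `discrete_loss`, the population size, the mutation scale); it is a toy under those assumptions, not the implementation in progress.

```python
import random

def predict(weights, pixels):
    """Toy model: a pixel is marked as a point if w * intensity + b > 0."""
    w, b = weights
    return [1 if w * x + b > 0 else 0 for x in pixels]

def discrete_loss(weights, pixels, target):
    """Non-differentiable loss: mismatched pixels plus the point-count gap."""
    pred = predict(weights, pixels)
    mismatches = sum(p != t for p, t in zip(pred, target))
    count_gap = abs(sum(pred) - sum(target))
    return mismatches + count_gap

def evolve(pixels, target, pop_size=20, generations=30, sigma=0.3, seed=0):
    """Elitist genetic algorithm: keep the best quarter, refill with mutated crossovers."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable

    def score(weights):
        return discrete_loss(weights, pixels, target)

    population = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=score)
        history.append(score(population[0]))
        parents = population[: pop_size // 4]  # survivors are carried over unchanged
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # Uniform crossover per gene, followed by Gaussian mutation.
            child = tuple(rng.choice(pair) + rng.gauss(0, sigma) for pair in zip(a, b))
            children.append(child)
        population = parents + children
    best = min(population, key=score)
    history.append(score(best))
    return best, history

pixels = [0.1, 0.2, 0.9, 0.15, 0.8, 0.05, 0.3, 0.95]  # made-up intensities
target = [0, 0, 1, 0, 1, 0, 0, 1]                      # made-up sparse labels
best, history = evolve(pixels, target)
print(best, history[0], history[-1])
```

Because the survivors are kept unchanged between generations, the best loss never gets worse from one generation to the next, and no gradient of `discrete_loss` is ever needed.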

Media: